How do you differentiate vector functions?

Review

In first-year calculus we learned how to differentiate multivariable composite functions.

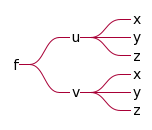

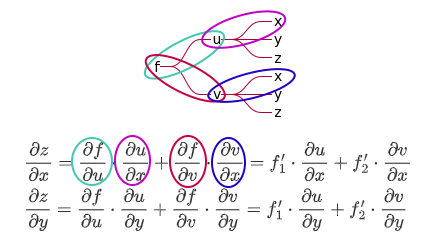

For $z=f(u(x, y), v(x, y))$, the dependency structure is: $z$ depends on $u$ and $v$, and both $u$ and $v$ depend on $x$ and $y$.

The partial derivatives with respect to $x$ and $y$ are:

$$ \begin{array}{l} \dfrac{\partial z}{\partial x}=\dfrac{\partial f}{\partial u} \cdot \dfrac{\partial u}{\partial x}+\dfrac{\partial f}{\partial v} \cdot \dfrac{\partial v}{\partial x}=f_{1}^{\prime} \cdot \dfrac{\partial u}{\partial x}+f_{2}^{\prime} \cdot \dfrac{\partial v}{\partial x} \\ \dfrac{\partial z}{\partial y}=\dfrac{\partial f}{\partial u} \cdot \dfrac{\partial u}{\partial y}+\dfrac{\partial f}{\partial v} \cdot \dfrac{\partial v}{\partial y}=f_{1}^{\prime} \cdot \dfrac{\partial u}{\partial y}+f_{2}^{\prime} \cdot \dfrac{\partial v}{\partial y} \end{array} $$

The pattern is easy to see:

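In short, to differentiate a multivariable composite function with respect to $x$, find every dependency path that leads to $x$, apply the chain rule along each path, and add up the results.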
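The path-sum rule can be checked numerically. The sketch below uses hypothetical functions chosen only for illustration, $u = x^2 + y$, $v = xy$ and $f(u, v) = u\sin v$, adds the products along the paths $z \to u \to x$ and $z \to v \to x$, and compares the sum with a central finite difference (used purely as a cross-check, not as part of the method).

```python
import math

# Hypothetical example functions (not from the text above):
#   u(x, y) = x**2 + y,  v(x, y) = x * y,  f(u, v) = u * sin(v)
def u(x, y):
    return x**2 + y

def v(x, y):
    return x * y

def f(a, b):
    return a * math.sin(b)

def z(x, y):
    return f(u(x, y), v(x, y))

def dz_dx_by_paths(x, y):
    """Sum the chain-rule products over both paths z -> u -> x and z -> v -> x."""
    a, b = u(x, y), v(x, y)
    f1 = math.sin(b)        # f'_1 = df/du
    f2 = a * math.cos(b)    # f'_2 = df/dv
    du_dx = 2 * x
    dv_dx = y
    return f1 * du_dx + f2 * dv_dx

def dz_dx_numeric(x, y, h=1e-6):
    """Central finite difference of z in x, used only as a cross-check."""
    return (z(x + h, y) - z(x - h, y)) / (2 * h)

x0, y0 = 0.7, 1.3
print(dz_dx_by_paths(x0, y0), dz_dx_numeric(x0, y0))
```

The two printed numbers should agree to roughly ten significant digits; dropping either path term breaks the agreement.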

Derivatives of matrices and vectors with respect to a scalar

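Differentiating a matrix with respect to a scalar simply means differentiating every entry of the matrix with respect to that scalar. Since this is straightforward, only a few properties of vector functions $\overrightarrow{\mathbf{r}}(t)$ and $\overrightarrow{\mathbf{u}}(t)$ are listed here ($c$ is a constant and $f(t)$ a scalar function):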

i. Scalar multiple: $\dfrac{d}{d t}[c\,\overrightarrow{\mathbf{r}}(t)]=c\,\overrightarrow{\mathbf{r}}^{\prime}(t)$

ii. Sum and difference: $\dfrac{d}{d t}[\overrightarrow{\mathbf{r}}(t) \pm \overrightarrow{\mathbf{u}}(t)]=\overrightarrow{\mathbf{r}}^{\prime}(t) \pm \overrightarrow{\mathbf{u}}^{\prime}(t)$

iii. Scalar product: $\dfrac{d}{d t}[f(t) \overrightarrow{\mathbf{u}}(t)]=f^{\prime}(t) \overrightarrow{\mathbf{u}}(t)+f(t) \overrightarrow{\mathbf{u}}^{\prime}(t)$

iv. Dot product: $\dfrac{d}{d t}[\overrightarrow{\mathbf{r}}(t) \cdot \overrightarrow{\mathbf{u}}(t)]=\overrightarrow{\mathbf{r}}^{\prime}(t) \cdot \overrightarrow{\mathbf{u}}(t)+\overrightarrow{\mathbf{r}}(t) \cdot \overrightarrow{\mathbf{u}}^{\prime}(t)$

v. Cross product: $\dfrac{d}{d t}[\overrightarrow{\mathbf{r}}(t) \times \overrightarrow{\mathbf{u}}(t)]=\overrightarrow{\mathbf{r}}^{\prime}(t) \times \overrightarrow{\mathbf{u}}(t)+\overrightarrow{\mathbf{r}}(t) \times \overrightarrow{\mathbf{u}}^{\prime}(t)$

vi. Chain rule: $\dfrac{d}{d t}[\overrightarrow{\mathbf{r}}(f(t))]=\overrightarrow{\mathbf{r}}^{\prime}(f(t))\, f^{\prime}(t)$

vii. If $\overrightarrow{\mathbf{r}}(t) \cdot \overrightarrow{\mathbf{r}}(t)=c$, then $\overrightarrow{\mathbf{r}}(t) \cdot \overrightarrow{\mathbf{r}}^{\prime}(t)=0$
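As a sanity check of rules iv and v, the sketch below differentiates $\overrightarrow{\mathbf{r}}\cdot\overrightarrow{\mathbf{u}}$ and $\overrightarrow{\mathbf{r}}\times\overrightarrow{\mathbf{u}}$ numerically and compares them with the product-rule formulas. It assumes NumPy is available; the curves $\overrightarrow{\mathbf{r}}(t) = (\cos t, \sin t, t)$ and $\overrightarrow{\mathbf{u}}(t) = (t^2, 1, e^t)$ are hypothetical examples, and the finite difference is only a cross-check.

```python
import numpy as np

# Hypothetical vector functions r(t), u(t) in R^3 and their hand-computed derivatives
def r(t):
    return np.array([np.cos(t), np.sin(t), t])

def dr(t):
    return np.array([-np.sin(t), np.cos(t), 1.0])

def u(t):
    return np.array([t**2, 1.0, np.exp(t)])

def du(t):
    return np.array([2 * t, 0.0, np.exp(t)])

def numeric_derivative(g, t, h=1e-6):
    """Central difference of g at t; works entrywise if g returns an array."""
    return (g(t + h) - g(t - h)) / (2 * h)

t0 = 0.4
# iv. Dot product rule
dot_rule = np.dot(dr(t0), u(t0)) + np.dot(r(t0), du(t0))
dot_numeric = numeric_derivative(lambda t: np.dot(r(t), u(t)), t0)
# v. Cross product rule: the order of the factors must be preserved
cross_rule = np.cross(dr(t0), u(t0)) + np.cross(r(t0), du(t0))
cross_numeric = numeric_derivative(lambda t: np.cross(r(t), u(t)), t0)
print(np.isclose(dot_rule, dot_numeric), np.allclose(cross_rule, cross_numeric))
```

Swapping the factor order in the cross-product rule (for example writing $\overrightarrow{\mathbf{u}} \times \overrightarrow{\mathbf{r}}^{\prime}$) flips the sign of that term, which this check would catch.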

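A scalar can be viewed as a $1\times 1$ matrix and a (column) vector as an $N\times 1$ matrix, so the rest of this post mainly discusses derivatives with respect to vectors and matrices.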

Numerator layout and denominator layout

In short:

Numerator layout: the numerator keeps its original shape and the denominator is transposed.

Denominator layout: the denominator keeps its original shape and the numerator is transposed.

By convention, a column vector is the "original" shape and a row vector is its transpose.
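A small worked example may make the two conventions concrete. Take the linear map $\mathbf{y} = \mathbf{A}\mathbf{x}$ with $\mathbf{A} \in \mathbb{R}^{m\times n}$ and $\mathbf{x} \in \mathbb{R}^{n}$ (an illustrative choice, not taken from the sources). Since $y_i = \sum_{j=1}^{n} A_{ij}x_j$, every partial derivative is $\partial y_i/\partial x_j = A_{ij}$; the two layouts only differ in how these $mn$ numbers are arranged:

$$ \underbrace{\frac{\partial \mathbf{y}}{\partial \mathbf{x}} = \mathbf{A}}_{\text{numerator layout, } m\times n} \qquad \underbrace{\frac{\partial \mathbf{y}}{\partial \mathbf{x}} = \mathbf{A}^{\mathsf T}}_{\text{denominator layout, } n\times m} $$

Likewise, for a scalar $y$ the numerator layout gives a $1\times n$ row vector and the denominator layout an $n\times 1$ column vector.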

Derivative of a real-valued function with respect to a vector

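Let $y$ be a real-valued function of the column vector

$$ {\displaystyle \mathbf {x} ={\begin{bmatrix}x_{1}&x_{2}&\cdots &x_{n}\end{bmatrix}}^{\mathsf {T}}} $$

Then, in numerator layout, the derivative of $y$ with respect to $\mathbf{x}$ is the $1\times n$ row vector: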

$$ {\displaystyle {\frac {\partial y}{\partial \mathbf {x} }}={\begin{bmatrix}{\frac {\partial y}{\partial x_{1}}}&{\frac {\partial y}{\partial x_{2}}}&\cdots &{\frac {\partial y}{\partial x_{n}}}\end{bmatrix}}.} $$

Relation to the gradient:

$$ {\displaystyle \nabla f={\begin{bmatrix}{\frac {\partial f}{\partial x_{1}}}\\\vdots \\{\frac {\partial f}{\partial x_{n}}}\end{bmatrix}}=\left({\frac {\partial f}{\partial \mathbf {x} }}\right)^{\mathsf {T}}} $$

Relation to the directional derivative:

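The directional derivative along a unit column vector $\mathbf{u}$ is defined as ${\displaystyle \nabla _{\mathbf {u} }{f}(\mathbf {x} )=\nabla f(\mathbf {x} )\cdot \mathbf {u} }$, so in numerator layout it can also be written as the product of a row vector and a column vector: ${\displaystyle \nabla _{\mathbf {u} }f={\frac {\partial f}{\partial \mathbf {x} }}\mathbf {u} =(\nabla f)^{\mathsf T}\mathbf {u} }$.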
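To see the shapes and the directional-derivative formula in action, the sketch below uses the quadratic form $f(\mathbf{x}) = \mathbf{x}^{\mathsf T}\mathbf{A}\mathbf{x}$ with a random matrix $\mathbf{A}$ (a hypothetical test case, assuming NumPy). Its numerator-layout derivative is the row vector $\mathbf{x}^{\mathsf T}(\mathbf{A} + \mathbf{A}^{\mathsf T})$, the gradient is its transpose, and $\frac{\partial f}{\partial \mathbf{x}}\mathbf{u}$ is compared against a central difference along $\mathbf{u}$.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n))   # hypothetical test matrix
x = rng.standard_normal(n)

def f(x):
    """Illustrative quadratic form f(x) = x^T A x."""
    return x @ A @ x

# Numerator layout: df/dx is a 1 x n row vector, x^T (A + A^T)
df_dx = (x @ (A + A.T)).reshape(1, n)
# Gradient: n x 1 column vector, the transpose of df/dx
grad = df_dx.T

# Directional derivative along a unit vector u: (df/dx) u, a 1 x 1 matrix
u = rng.standard_normal(n)
u /= np.linalg.norm(u)
dir_formula = (df_dx @ u.reshape(n, 1)).item()

h = 1e-6
dir_numeric = (f(x + h * u) - f(x - h * u)) / (2 * h)
print(df_dx.shape, grad.shape, np.isclose(dir_formula, dir_numeric))
```

This should print `(1, 4) (4, 1) True`. Writing `grad.T @ u` instead of `df_dx @ u` gives the same number, which is exactly the identity $\frac{\partial f}{\partial \mathbf{x}}\mathbf{u} = (\nabla f)^{\mathsf T}\mathbf{u}$.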

Rules:

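- Linearity (a bit like independence in probability theory?)

Writing $R$ for the operator $\partial/\partial\mathbf{x}$, this means $R(f+g) = R(f) + R(g)$, which has the form of Cauchy's functional equation, and $R(cf) = cR(f)$ for a constant $c$.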

- Product rule:

$$ \frac{\partial f(\mathbf{x}) g(\mathbf{x})}{\partial \mathbf{x}}=f(\mathbf{x}) \frac{\partial g(\mathbf{x})}{\partial \mathbf{x}}+\frac{\partial f(\mathbf{x})}{\partial \mathbf{x}} g(\mathbf{x}) $$

That is, $R(fg) = fR(g) + gR(f)$.
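A quick numerical check of the product rule, assuming NumPy and two hypothetical scalar functions: $f(\mathbf{x}) = \mathbf{a}^{\mathsf T}\mathbf{x}$ with derivative $\mathbf{a}^{\mathsf T}$, and $g(\mathbf{x}) = \mathbf{x}^{\mathsf T}\mathbf{x}$ with derivative $2\mathbf{x}^{\mathsf T}$. In the code, 1-D NumPy arrays stand in for the numerator-layout row vectors.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 3
a = rng.standard_normal(n)   # hypothetical constant vector
x = rng.standard_normal(n)

f = lambda x: a @ x          # f(x) = a^T x,  df/dx = a^T
g = lambda x: x @ x          # g(x) = x^T x,  dg/dx = 2 x^T

# Product rule in numerator layout (1-D arrays play the role of row vectors)
rule = f(x) * (2 * x) + a * g(x)

def numeric_row_derivative(func, x, h=1e-6):
    """Row of partial derivatives of a scalar function, by central differences."""
    out = np.empty_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        out[i] = (func(x + e) - func(x - e)) / (2 * h)
    return out

numeric = numeric_row_derivative(lambda x: f(x) * g(x), x)
print(np.allclose(rule, numeric))
```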

Derivative of a vector-valued function with respect to a vector

Differentiating ${\displaystyle \mathbf {y} ={\begin{bmatrix}y_{1}&y_{2}&\cdots &y_{m}\end{bmatrix}}^{\mathsf {T}}}$ (where each $y_i$ is a function) with respect to the vector ${\displaystyle \mathbf {x} ={\begin{bmatrix}x_{1}&x_{2}&\cdots &x_{n}\end{bmatrix}}^{\mathsf {T}}}$ in numerator layout (the denominator $\mathbf{x}$ is transposed) gives the $m\times n$ matrix:

$$ {\displaystyle {\frac {\partial \mathbf {y} }{\partial \mathbf {x} }}={\begin{bmatrix}{\frac {\partial y_{1}}{\partial x_{1}}}&{\frac {\partial y_{1}}{\partial x_{2}}}&\cdots &{\frac {\partial y_{1}}{\partial x_{n}}}\\{\frac {\partial y_{2}}{\partial x_{1}}}&{\frac {\partial y_{2}}{\partial x_{2}}}&\cdots &{\frac {\partial y_{2}}{\partial x_{n}}}\\\vdots &\vdots &\ddots &\vdots \\{\frac {\partial y_{m}}{\partial x_{1}}}&{\frac {\partial y_{m}}{\partial x_{2}}}&\cdots &{\frac {\partial y_{m}}{\partial x_{n}}}\\\end{bmatrix}}} $$

In short, this applies the scalar-by-vector derivative to each $y_i$ in turn: row $i$ is $\partial y_i/\partial \mathbf{x}$. This matrix is the Jacobian of $\mathbf{y}$.
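The sketch below builds the numerator-layout Jacobian of a hypothetical function $\mathbf{y}: \mathbb{R}^3 \to \mathbb{R}^2$, $\mathbf{y}(\mathbf{x}) = (x_1 x_2,\ \sin x_1 + x_3)^{\mathsf T}$, column by column with central differences (assuming NumPy), and checks both the $m\times n$ shape and the hand-derived entries.

```python
import numpy as np

def y(x):
    """Hypothetical vector-valued function R^3 -> R^2."""
    return np.array([x[0] * x[1], np.sin(x[0]) + x[2]])

def jacobian_analytic(x):
    # Row i holds the derivative of y_i with respect to x (numerator layout, m x n)
    return np.array([[x[1], x[0], 0.0],
                     [np.cos(x[0]), 0.0, 1.0]])

def jacobian_numeric(func, x, h=1e-6):
    """Build the m x n Jacobian column by column with central differences."""
    m, n = func(x).size, x.size
    J = np.empty((m, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = h
        J[:, j] = (func(x + e) - func(x - e)) / (2 * h)
    return J

x0 = np.array([0.5, -1.2, 2.0])
J = jacobian_numeric(y, x0)
print(J.shape, np.allclose(J, jacobian_analytic(x0)))
```

This should print `(2, 3) True`. Perturbing $x_j$ fills column $j$, which is why the numerator layout has one row per output and one column per input.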

Derivative of a real-valued function with respect to a matrix

Let $y(\mathbf{X})$ be a scalar function of a $p\times q$ matrix $\mathbf{X}$ with entries $x_{ij}$. Then, in numerator layout,

$$ \nabla_{\mathbf{X}} y(\mathbf{X}) = {\displaystyle {\frac {\partial y}{\partial \mathbf {X} }}={\begin{bmatrix}{\frac {\partial y}{\partial x_{11}}}&{\frac {\partial y}{\partial x_{21}}}&\cdots &{\frac {\partial y}{\partial x_{p1}}}\\{\frac {\partial y}{\partial x_{12}}}&{\frac {\partial y}{\partial x_{22}}}&\cdots &{\frac {\partial y}{\partial x_{p2}}}\\\vdots &\vdots &\ddots &\vdots \\{\frac {\partial y}{\partial x_{1q}}}&{\frac {\partial y}{\partial x_{2q}}}&\cdots &{\frac {\partial y}{\partial x_{pq}}}\\\end{bmatrix}}} $$

In short, the scalar function is differentiated with respect to every entry of the matrix. Note that in numerator layout the result is $q\times p$: the entry in row $j$, column $i$ is $\partial y/\partial x_{ij}$, so the matrix has the shape of $\mathbf{X}^{\mathsf T}$.
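The index bookkeeping of the matrix above can be sketched in code. Assuming NumPy and the hypothetical function $y(\mathbf{X}) = \sum_{i,j} x_{ij}^2$ (so $\partial y/\partial x_{ij} = 2x_{ij}$), the helper below places each finite-difference partial at position $(j, i)$, and the result should equal $2\mathbf{X}^{\mathsf T}$ with shape $q\times p$.

```python
import numpy as np

def y(X):
    """Hypothetical scalar function: sum of squared entries of X."""
    return np.sum(X ** 2)

def numerator_layout_grad(func, X, h=1e-5):
    """Return the q x p matrix whose (j, i) entry is d func / d X_ij, as in the matrix above."""
    p, q = X.shape
    D = np.empty((q, p))
    for i in range(p):
        for j in range(q):
            E = np.zeros_like(X)
            E[i, j] = h
            D[j, i] = (func(X + E) - func(X - E)) / (2 * h)
    return D

X = np.arange(1.0, 7.0).reshape(2, 3)   # p = 2, q = 3
D = numerator_layout_grad(y, X)
print(D.shape, np.allclose(D, 2 * X.T))
```

This should print `(3, 2) True`. Storing the partial at `D[i, j]` instead would give the denominator-layout matrix $2\mathbf{X}$, which has the same shape as $\mathbf{X}$.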

The trace of an $n\times n$ matrix is the sum of its main-diagonal entries: $\operatorname{tr}(\mathbf{A}) = \mathbf{A}_{1, 1} + \mathbf{A}_{2, 2} + \cdots + \mathbf{A}_{n, n}$.

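Directional derivative of $f$ in the direction of a matrix $\mathbf{Y}$ with the same shape as $\mathbf{X}$: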

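$$ {\displaystyle \nabla _{\mathbf {Y} }f=\operatorname {tr} \left({\frac {\partial f}{\partial \mathbf {X} }}\mathbf {Y} \right)} $$

As an example, take $y = \mathbf{a}^{\mathsf T}\mathbf{X}\mathbf{b}$ with $\mathbf{a} \in \mathbb{R}^{m}$, $\mathbf{X} \in \mathbb{R}^{m\times n}$ and $\mathbf{b} \in \mathbb{R}^{n}$. Since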

$$ \frac{\partial \mathbf{a}^{\mathsf T} \mathbf{X} \mathbf{b}}{\partial X_{i j}}=\frac{\partial \sum_{k=1}^{m} \sum_{l=1}^{n} a_{k} X_{k l} b_{l}}{\partial X_{i j}}=\frac{\partial a_{i} X_{i j} b_{j}}{\partial X_{i j}}=a_{i} b_{j} $$

(only the term with $k=i$, $l=j$ depends on $X_{ij}$), arranging these entries in the shape of $\mathbf{X}$ gives

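$$ \frac{\partial \mathbf{a}^\mathsf T\mathbf{X}\mathbf{b}}{\partial \mathbf{X}} = \mathbf{a}\mathbf{b}^{\mathsf T} $$

Because $\mathbf{a}\mathbf{b}^{\mathsf T}$ has the same shape as $\mathbf{X}$, this is the denominator-layout result. In the numerator layout used for the scalar-by-matrix derivative above, whose $(j, i)$ entry is $\partial y/\partial x_{ij}$, the derivative is the transpose $\mathbf{b}\mathbf{a}^{\mathsf T}$.

These are the basic definitions. Specific examples and techniques will be added here as I come across them.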
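The $\mathbf{a}^{\mathsf T}\mathbf{X}\mathbf{b}$ example and the trace form of the directional derivative can both be checked numerically. The sketch below assumes NumPy and random test data; it compares entrywise finite differences with $\mathbf{a}\mathbf{b}^{\mathsf T}$ (denominator layout), and compares $\operatorname{tr}(\mathbf{b}\mathbf{a}^{\mathsf T}\mathbf{Y})$, built from the numerator-layout derivative, with a central difference along the direction $\mathbf{Y}$.

```python
import numpy as np

rng = np.random.default_rng(2)
m, n = 3, 2
a = rng.standard_normal(m)          # hypothetical test data
b = rng.standard_normal(n)
X = rng.standard_normal((m, n))
Y = rng.standard_normal((m, n))     # direction, same shape as X

f = lambda X: a @ X @ b             # y = a^T X b

denominator = np.outer(a, b)        # m x n, entry (i, j) = a_i b_j
numerator = np.outer(b, a)          # n x m, its transpose

# Entrywise partial derivatives d f / d X_ij by central differences
h = 1e-6
D = np.empty((m, n))
for i in range(m):
    for j in range(n):
        E = np.zeros((m, n))
        E[i, j] = h
        D[i, j] = (f(X + E) - f(X - E)) / (2 * h)

# Directional derivative via the trace formula (numerator layout)
dir_trace = np.trace(numerator @ Y)
dir_numeric = (f(X + h * Y) - f(X - h * Y)) / (2 * h)
print(np.allclose(D, denominator), np.isclose(dir_trace, dir_numeric))
```

Note that the trace formula needs the numerator-layout matrix: `numerator @ Y` is $n\times n$, and its trace equals $\sum_{i,j} a_i b_j Y_{ij}$.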
